A popular use of Salesforce is as a front end system of engagement application, using a lookup integration to the more static system of record data in a back-end such as SAP. I wanted to set up a demo to show this but I didn’t have access to an SAP environment so I decided to simulate the back-end by creating a Postgres database in Heroku.

I decided to use some publicly available open data on properties as the database and for this to be looked up dynamically from the account record in Salesforce.

Here are the steps I went through.

First get a Heroku account. Go to heroku.com and ‘sign up for free’

Then login.

On my computer I installed node.js from https://nodejs.org/

And npm from https://github.com/npm/npm

And the Heroku toolbelt from https://devcenter.heroku.com/articles/getting-started-with-nodejs#set-up

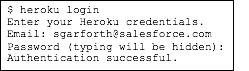

Once installed, you can use the heroku command from your command shell. Log in using the email address and password you used when creating your Heroku account:

Execute the following commands to clone the sample application:

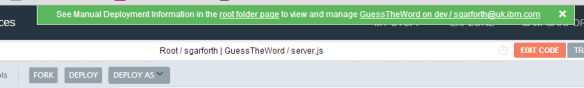

You now have a functioning git repository that contains a simple application as well as apackage.json file, which is used by Node’s dependency manager.

Now we create an app on Heroku, which prepares Heroku to receive the source code.

When you create an app, a git remote (called heroku) is also created and associated with your local git repository.

Heroku generates a random name (in this case glacial-sierra-8855) for your app.

The package.json file determines both the version of Node.js that will be used to run your application on Heroku, as well as the dependencies that should be installed with your application. When an app is deployed, Heroku reads this file and installs the appropriate node version together with the dependencies using the npm install command.

Run this command in your local directory to install the dependencies, preparing your system for running the app locally:

Now we add a free Heroku Postgres Starter Tier dev database to the app.

Now we need to add Postgres to the path:

I found some sample data of properties here.

Download the CSV file to your current directory and delete first line so there’s only one header.

Now add an index column; so in Excel insert a new column, put 1 and 2 as the first two entries and then highlight these and drag the mouse down so that it auto populates the other records with increasing indices:

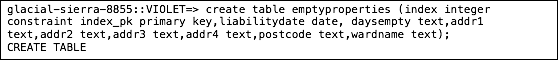

Now we create the database table in the same format as the spreadsheet.

Run a select statement to check it worked:

Set the date format to be UK format like the spreadsheet.

Then copy the spreadsheet into the database:

So now we have an app, a database and a table. In order to access it from Salesforce we need to request that the database be enabled as an external object by raising a ticket at https://help.heroku.com/

You will need to provide Support with the application name (in this case glacial-sierra-8855).

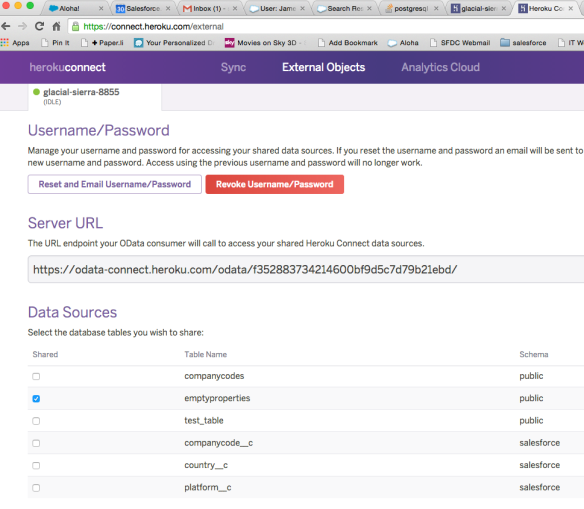

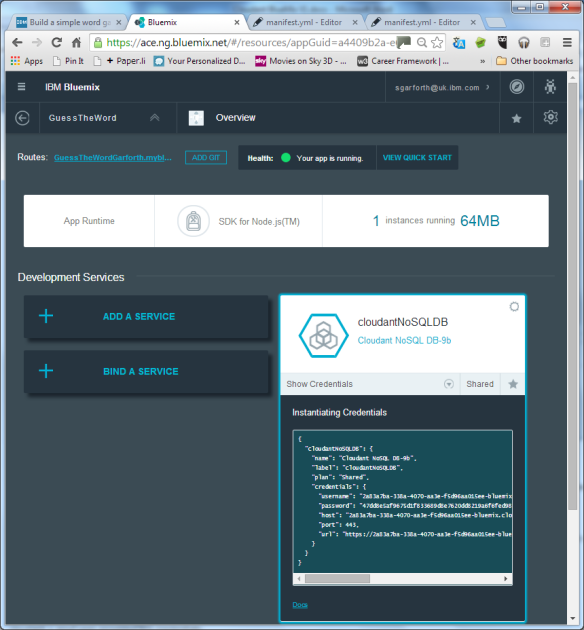

Once external object support has been enabled go to https://connect.heroku.com/

and set the app as a Heroku Connect Instance and get the username, password and url for it.

Select the ’emptyproperties’ data source to share:

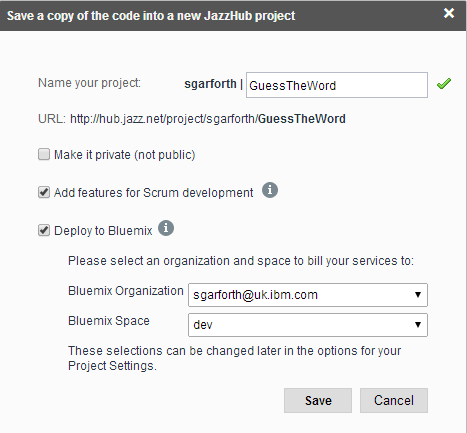

Now, to connect to this from Salesforce you need a Salesforce org with Lightning Connect/OData enabled. If you request a developer environment from here then you will get this functionality automatically https://developer.salesforce.com/signup

In your Salesforce org:

- Click Setup (upper right corner)

- Click Develop > External Data Sources (left navigation)

- Click New External Data Source

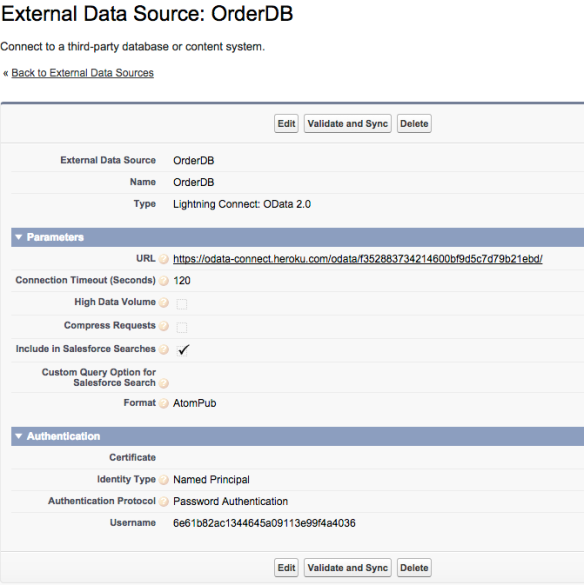

- Enter OrderDB as the Label. As you click or tab away from the label field, the Name field should automatically default to OrderDB.

- Select Lightning Connect: OData 2.0 as the Type.

(OrderDB doesn’t have to be the name, choose something meaningful for you).

Enter the url, username and password from heroku

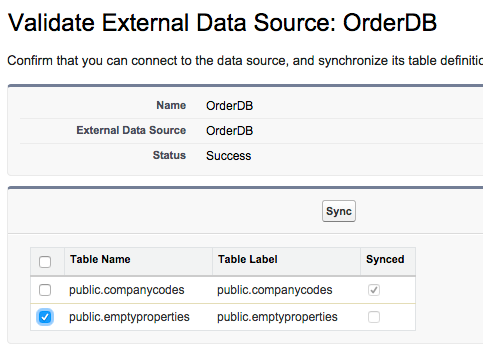

- click ‘validate and sync’

- and select the ‘emptyproperties’ table

- and select ‘sync’

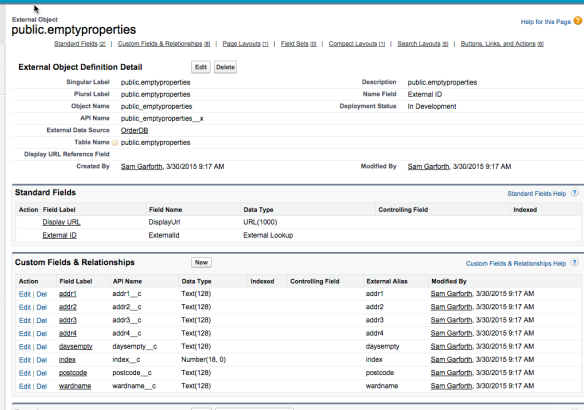

Then click into the ’emptyproperties’ external object

You should be able to see that all the fields have been picked up from the database:

You can now create a Custom Tab to Easily Access properties

- Click Setup (upper right corner)

- Click Create > Tabs

- Click the New button next to Custom Object Tabs.

- Select properties as the Object.

- Click the selector next to Tab Style and choose whichever style you like.

- Click Next.

- Click Next to accept the default tab visibility settings.

- Choose the apps that you want the tab to be included in.

- Click Save.

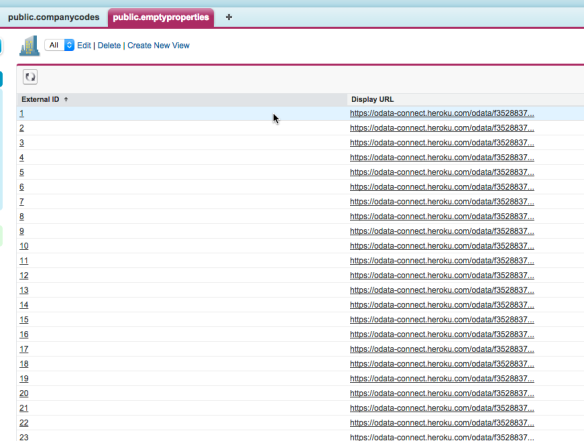

Now there will be a new tab:

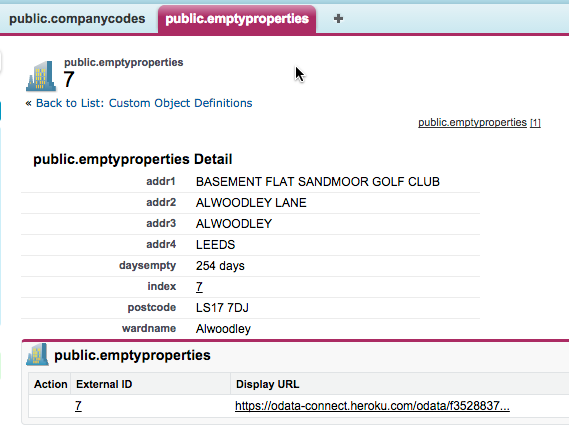

Click on ‘go’ to view all. All the records are now accessible in the database by clicking through the external ids.

Now go back to ‘external objects’ where you were just before creating the tab.

Now we want to make the index an external lookup. Click ‘edit’ next to index.

Select “change field type”:

- Select External Lookup Relationship and click Next. An external lookup relationship can link any object to an external object.

- Select emptyproperties as the value of Related To and click Next.

- Enter4 as the value for Length and click Next.

- Enable theVisible checkbox to make the relationship visible to all profiles, and click Next.

- Click Save to accept the defaults – you definitely want an ‘OrderDetails’ related list on the Orders page layout!

Now go to the properties tab and select an external id and the full property detail is displayed:

[Edit: if the property details don’t show up the you will need to go to the user’s Profile and enable READ in the FLS of your External Data Object fields.]

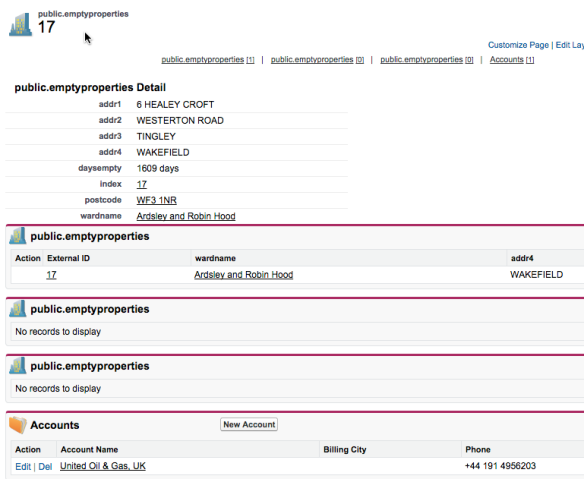

Now let’s assign properties to accounts. Se we’ll edit the account record and add a property

- Setup, customize, accounts, fields

- New custom field

- External lookup

- Select the properties but change the field label just to Property

- Step through and save it

- Now when we go to an account we see an empty field for Property

If we edit the field and put an index in it becomes a link to the Heroku properties database

Now the property also shows a link back to the account from the property:

And that’s it. We now have accounts in the Salesforce CRM system with real-time lookups to the system of record in a Heroku database.

r the last three years or so and have seen dramatic changes in the

r the last three years or so and have seen dramatic changes in the

Cloud is consumption model. It’s the idea of taking away all the IT skills and effort required by a user and letting them focus on their actual functional requirements. All the IT detail is hidden from them in The Cloud. Smart phones and tablets have really helped consumers understand this concept. They’ve become liberated. Knowing very little about IT they have become empowered with self-service IT to access functionality on demand. Within seconds they can decide that they want a business application, they can find it on an app store, buy and install it themselves and be up and running using it. When they’ve finished they can delete it.

Cloud is consumption model. It’s the idea of taking away all the IT skills and effort required by a user and letting them focus on their actual functional requirements. All the IT detail is hidden from them in The Cloud. Smart phones and tablets have really helped consumers understand this concept. They’ve become liberated. Knowing very little about IT they have become empowered with self-service IT to access functionality on demand. Within seconds they can decide that they want a business application, they can find it on an app store, buy and install it themselves and be up and running using it. When they’ve finished they can delete it.