Originally published here on July 12, 2022.

Continuous Integration and Continuous Delivery (CI/CD) is perhaps best represented by the infinity symbol. It is a constant, ongoing process: new integrations are rolled out without interrupting the systems that are already running, because stopping systems in order to update them is costly and inefficient.

To be sure that you can successfully introduce the latest builds into your system, you need to know how well they will run alongside the components that are already installed and where bottlenecks may occur.

The process of finding this out is called performance benchmarking, and it is generally the final testing step before a new integration is deployed to the live software environment.
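To make the idea of a pre-deployment performance check more concrete, here is a minimal hand-rolled sketch in Java of a regression gate: it warms up a workload, takes the median of several timed runs, and fails the build if the result is more than 10% slower than a stored baseline. The class and method names (`PerfGate`, `medianNanos`, `isRegression`), the 10% tolerance and the placeholder baseline are hypothetical and are not part of CyBench or any CI tool's API. A dedicated benchmark harness such as JMH controls JIT warm-up, forking and measurement noise far more rigorously than this.

```java
import java.util.Arrays;

// Hypothetical performance gate for illustration only; not CyBench's API.
public final class PerfGate {

    // Sink for the workload's result so the JIT cannot discard the loop as dead code.
    static volatile long sink;

    // Stand-in workload; replace with the code path you actually want to benchmark.
    static void workload() {
        long sum = 0;
        for (int i = 0; i < 1_000_000; i++) {
            sum += (long) i * i;
        }
        sink = sum;
    }

    // Runs the workload `warmups` times untimed, then `runs` times timed,
    // and returns the median duration in nanoseconds (use an odd `runs`).
    public static long medianNanos(Runnable workload, int warmups, int runs) {
        for (int i = 0; i < warmups; i++) {
            workload.run(); // give the JIT a chance to compile hot paths first
        }
        long[] samples = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            workload.run();
            samples[i] = System.nanoTime() - start;
        }
        Arrays.sort(samples);
        return samples[runs / 2];
    }

    // True when `currentNanos` exceeds the baseline by more than the allowed fraction.
    public static boolean isRegression(long baselineNanos, long currentNanos, double tolerance) {
        return currentNanos > baselineNanos * (1.0 + tolerance);
    }

    public static void main(String[] args) {
        // Assumed: the baseline (ns) comes from a previous build; the default is an arbitrary placeholder.
        long baseline = args.length > 0 ? Long.parseLong(args[0]) : 5_000_000L;
        long current = medianNanos(PerfGate::workload, 5, 11);
        System.out.printf("baseline=%d ns, current=%d ns%n", baseline, current);
        if (isRegression(baseline, current, 0.10)) {
            System.err.println("Performance regression beyond 10% tolerance; failing the build.");
            System.exit(1); // a non-zero exit status fails the CI step
        }
    }
}
```

On Java 11 or later this can be run directly from source, for example `java PerfGate.java 4800000`, with the stored baseline as the argument. Using the median rather than the mean keeps a single slow outlier run (for example, one interrupted by garbage collection) from failing the gate on its own.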

Benefits of Performance Benchmarking

Find and Improve Slow Code

Before any new software update goes live, the development team should review the results from the performance benchmarking software to identify slow or inefficient code and plan how it can be improved.

Automated Regression Testing

This kind of testing runs automatically and focuses on verifying that the software is still recompiled correctly after an update. It also tests the workflow, or core logic, of the software to confirm that it is still functionally correct. All supporting services that rely on and interact with the core software should be tested as well.

Benchmark All Aspects of your System

Good benchmarking software lets you benchmark every aspect of your system, including APIs, libraries, software builds and release candidates. From a DevOps perspective, this makes it easy to see which parts of your system are likely to be the most resource-intensive and where further changes and optimizations can be made.

Increase the Quality of your Applications

The business case for including performance benchmarking in your CI/CD pipeline is strengthened by the fact that knowing where improvement is needed allows your applications to get better over time, leading to better user experiences for everyone and fewer complaints to deal with.

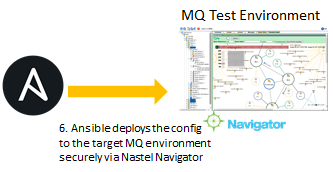

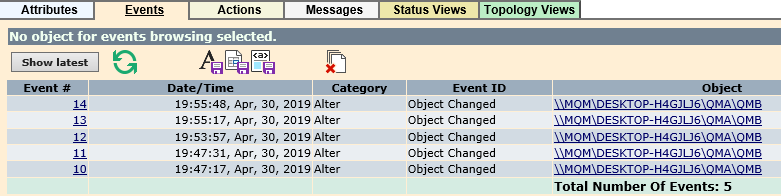

Why Choose Nastel Technologies?

Nastel has developed CyBench, which is the world’s number one performance benchmarking tool. CyBench supports a wide range of integrations and works with Maven, Gradle, Jenkins, Eclipse, IntelliJ, GitHub and more.

With CyBench, you can benchmark your entire Java stack on any platform in a matter of minutes and compare results across containers, virtual machines and the cloud in seconds. This gives you a realistic picture of performance across your entire network.

CyBench delivers this elite level of benchmarking without your having to write any code, and it displays the results in an attractive, intuitive graphical user interface. For your business, this means you will be able to deliver software 10x faster and more efficiently than ever before. Integrating CyBench into your CI/CD pipeline will allow you to streamline your integration process and implement rigorous regression testing.
